So, how busy were you patching after the worm outbreak last month? Hopefully not very busy - hopefully you had MS17-010 patched in March, soon after it was released. Unfortunately, it appears that many did not, which raises the question, "Why not?" I am sure there are many reasons - I have heard many of them myself in almost every organization I have worked for. So what should we do about SMB now that we have seen this worm and others actively taking advantage of unpatched systems? Few mitigations are as good as applying the patch. Please patch quickly and thoroughly! Another good option is to stop using SMB1, although there are still issues with SMB2 that also need to be patched.

But what can be done if none of these are options? What if you need SMB, but machines can't be patched, or they could be patched but can't be rebooted because of uptime requirements, business criticality, the moon being made of cheese, or whatever other bad reason for not patching exists? Another mitigation option is to block SMB with a firewall. For SMB worms, blocking SMB at the edge of your network and through VPN tunnels is a great start, but what if the worm gets inside the network some other way? As long as none of the end users execute untrustworthy email attachments or browse the internet while using a local admin account, things are fine. 😉 For discussion's sake, let's just say there is a very remote chance that these (or other) SMB exploits could run on an internal machine on some hypothetical network somewhere. Once the worm is in, the fact that it is remotely exploitable means that it can spread to other systems quickly and have a wide impact unless it is also mitigated internally.

One way to reduce the risk from unpatched (or unknowingly vulnerable) systems on the network is to use an endpoint firewall to block the service where it is not needed. For example, almost all workstations need outbound SMB when they are acting as a client, especially to things like domain controllers, file servers, and print servers. But how many things really need inbound access to SMB shares on workstations? There are a few use cases I could think of, but not many. For example, many admin functions that require inbound SMB to a workstation could be limited to hardened jump boxes or servers. Blocking SMB access from a workstation to other workstations can also have a substantial benefit, such as making workstation-to-workstation pivoting much harder. This is a micro implementation of that old adage of network segregation/zones, and there is a lot of benefit from blocking just this one protocol.

So if this can help us limit the risk to workstations, how can we limit risk to our servers? Important servers like domain controllers, file servers, and print servers often require SMB and should always be allowed, which is why they should be prioritized for quick patching. Outside of these, many servers don't need SMB, so it can be turned off; or, if a small amount of SMB is needed, it can be allowed for just the devices that need it and blocked for the rest with inbound rules on their endpoint firewall.

If there are servers that can't have the endpoint firewall deployed or the SMB service disabled, another option is to create an outbound rule in Windows Firewall with Advanced Security on as many workstations as possible that blocks traffic destined to SMB ports on devices known to be vulnerable. This isn't guaranteed to stop malicious activity: the firewall could be disabled, or the initial infection could come from a device without the outbound rule. But if the rule is pushed out via GPO, many of the highest-risk machines would be shielded from most automated infection vectors, which is better than nothing. If a similar scenario comes up in the future with SMB or another protocol, the same mechanism could be deployed as temporary protection for vulnerable devices until patching and rebooting catch up.
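The effect of such a rule can be modeled in a few lines. This is a minimal Python sketch, assuming one outbound block rule for TCP/445 scoped to a hypothetical list of known-vulnerable hosts, with the default outbound action left at Allow. It only models the rule logic; it does not interact with Windows Firewall.

```python
import ipaddress

# Hypothetical hosts known to be vulnerable (unpatched or not yet rebooted).
# These addresses are illustrative only.
VULNERABLE_HOSTS = [
    ipaddress.ip_network("10.0.5.20/32"),
    ipaddress.ip_network("10.0.7.0/28"),
]

SMB_PORT = 445


def outbound_action(remote_ip: str, remote_port: int, protocol: str) -> str:
    """Simplified model: block TCP/445 to vulnerable hosts, otherwise fall
    through to the default outbound action (Allow)."""
    addr = ipaddress.ip_address(remote_ip)
    if (
        protocol.upper() == "TCP"
        and remote_port == SMB_PORT
        and any(addr in net for net in VULNERABLE_HOSTS)
    ):
        return "block"
    return "allow"


if __name__ == "__main__":
    print(outbound_action("10.0.5.20", 445, "TCP"))   # block: vulnerable host, SMB
    print(outbound_action("10.0.5.20", 3389, "TCP"))  # allow: not SMB
    print(outbound_action("10.0.1.10", 445, "TCP"))   # allow: host not in the list
```

Only connections to the listed addresses are affected, so workstations can still reach patched servers such as domain controllers over SMB.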

Here is what an outbound rule to protect other vulnerable devices could look like (high level):

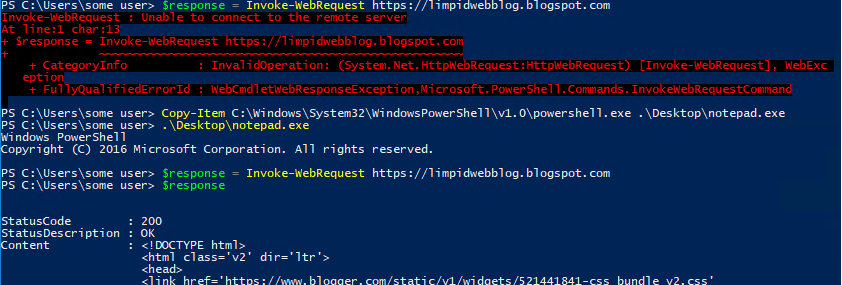

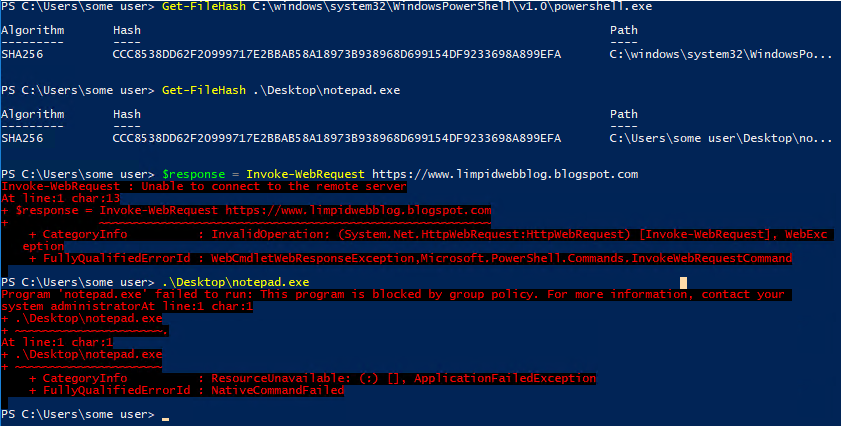

- Have outbound block rules with inverse blacklists for commonly abused applications (like I have covered in previous posts - especially for powershell.exe and mshta.exe)

- Configure an outbound block rule for TCP port 445 (SMB), and use inverse ranges to exclude (go around) servers/devices that have been patched and restarted (preferably things like Domain Controllers, Print Servers, File Servers, etc. that are patched quickly - I do not recommend blocking these). This is similar to the inverse ranges configured for applications as discussed in previous posts; the difference is that this rule applies to Any application and specifies a protocol and port instead, and this time the "skipped" IPs would likely be on an internal network instead of the internet.

- Leave the default outbound action set to Allow.
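Working out inverse ranges by hand is error-prone, so here is a minimal Python sketch that computes them, assuming IPv4 only and an illustrative scope of `10.0.0.0/24`. `inverse_ranges` is a hypothetical helper: it takes the addresses to go around (patched hosts or CIDR blocks) and returns the rest of the scope as `start-end` strings, the range form Windows Firewall accepts for a rule's remote addresses.

```python
import ipaddress
from typing import Iterable, List


def inverse_ranges(scope: str, exclude: Iterable[str]) -> List[str]:
    """Return the parts of the IPv4 `scope` not covered by `exclude`.

    `exclude` holds the hosts (or CIDR blocks) to go around, e.g. patched and
    rebooted servers. Results are "start-end" strings, or a bare address
    when a gap is a single host.
    """
    net = ipaddress.IPv4Network(scope)
    scope_start = int(net.network_address)
    scope_end = int(net.broadcast_address)

    # Merge adjacent/overlapping exclusions and sort them by address.
    holes = sorted(ipaddress.collapse_addresses(ipaddress.IPv4Network(e) for e in exclude))

    gaps = []
    cursor = scope_start  # first address not yet accounted for
    for hole in holes:
        if not hole.overlaps(net):
            continue  # exclusion lies entirely outside the scope
        first = max(int(hole.network_address), scope_start)
        last = min(int(hole.broadcast_address), scope_end)
        if first > cursor:
            gaps.append((cursor, first - 1))
        cursor = max(cursor, last + 1)
    if cursor <= scope_end:
        gaps.append((cursor, scope_end))

    def fmt(a: int, b: int) -> str:
        lo, hi = ipaddress.IPv4Address(a), ipaddress.IPv4Address(b)
        return str(lo) if a == b else f"{lo}-{hi}"

    return [fmt(a, b) for a, b in gaps]


if __name__ == "__main__":
    # Hypothetical example: three hosts in 10.0.0.0/24 are patched and rebooted.
    print(inverse_ranges("10.0.0.0/24", ["10.0.0.10", "10.0.0.11", "10.0.0.200"]))
    # ['10.0.0.0-10.0.0.9', '10.0.0.12-10.0.0.199', '10.0.0.201-10.0.0.255']
```

An empty result means every address in scope has been excluded, at which point the rule can be removed rather than pushed out with no addresses.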

As devices are patched, the inverse list can be modified to "go around" them, allowing traffic to them while still blocking devices that are not patched. In the case of an infection, the initially infected machine would be lost, and in many cases accessible file shares could be encrypted (in the case of ransomware), but this could stop the worm from spreading to other vulnerable workstations or servers. That could limit the damage significantly: instead of shutting down every device, recovery could be just a file restore for the affected shares and a re-deploy of the initially infected workstation.
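On a single test machine, the same rule can be created and later updated from the command line with `netsh advfirewall firewall`. The Python sketch below only builds the command strings (it does not run them); the rule name is hypothetical, and the range list is assumed to have been computed already. These commands change the local firewall policy, not a GPO, so in a domain the rule would still be maintained in the GPO as described above.

```python
from typing import List

# Hypothetical rule name; use whatever naming convention your environment follows.
RULE_NAME = "Block SMB to unpatched hosts"


def _remoteip(remote_ranges: List[str]) -> str:
    # netsh takes a comma-separated list of addresses, subnets, and ranges.
    if not remote_ranges:
        raise ValueError("no unpatched ranges left; delete the rule instead")
    return ",".join(remote_ranges)


def add_rule_command(remote_ranges: List[str], rule_name: str = RULE_NAME) -> str:
    """Command that creates the outbound TCP/445 block rule."""
    return (
        f'netsh advfirewall firewall add rule name="{rule_name}" '
        "dir=out action=block protocol=TCP remoteport=445 "
        f"remoteip={_remoteip(remote_ranges)}"
    )


def update_rule_command(remote_ranges: List[str], rule_name: str = RULE_NAME) -> str:
    """Command that replaces the rule's remote address list after more hosts
    have been patched and rebooted."""
    return (
        f'netsh advfirewall firewall set rule name="{rule_name}" dir=out '
        f"new remoteip={_remoteip(remote_ranges)}"
    )


if __name__ == "__main__":
    # Before: 10.0.0.10 and 10.0.0.11 are patched, so the rule goes around them.
    print(add_rule_command(["10.0.0.0-10.0.0.9", "10.0.0.12-10.0.0.255"]))
    # After 10.0.0.200 is also patched and rebooted, the list gains another hole.
    print(update_rule_command(
        ["10.0.0.0-10.0.0.9", "10.0.0.12-10.0.0.199", "10.0.0.201-10.0.0.255"]
    ))
```

Refusing to build a command for an empty list is deliberate: an empty remote address list means everything has been patched, and removing the rule is clearer than leaving one with no scope.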

This approach is certainly not limited to SMB; it could be used for other protocols and ports too. Hopefully there would be enough warning to configure it and push it out to all devices before a new attack hit. A better scenario would be to proactively enable WFP audit logs, forward them to a central location, and analyze them to build a list of outbound access to many popular services, then only allow those services for the destinations that really need them. Here is a possible list of places to start, excluding really common ports like 80 and 443. If this could be configured and deployed before something was released, the chances of it spreading on your network would be much lower. There is also the added (marginal) benefit of becoming aware when someone tries to use a new service without going through proper channels. Maybe that server admin wasn't authorized to install MySQL, or maybe that server (or workstation!) wasn't supposed to have a file share or SMTP service configured on it. With something like this in place, the workstations couldn't connect to it until the rules were reconfigured... which provides an opportunity to discuss securing and managing the new app before it is deployed to production.
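Acting on the WFP audit idea means summarizing connection events (for example Security event 5156, which Windows logs for permitted connections when filtering platform connection auditing is enabled) by destination port. Below is a minimal Python sketch of that grouping, assuming the events have already been exported to CSV with hypothetical columns `direction`, `protocol`, `dest_address`, and `dest_port` (protocol already translated to a name); the sample rows are made up. It skips 80 and 443 and shows which destinations each remaining service is actually used for, a possible starting point for a per-port allow list.

```python
import csv
import io
import ipaddress
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple

# Ports left out because almost every machine legitimately uses them.
COMMON_PORTS = {80, 443}


def destinations_by_port(rows: Iterable[dict]) -> Dict[Tuple[str, int], Set[str]]:
    """Group outbound connections by (protocol, destination port) and collect
    the destination addresses seen for each.

    Each row is assumed to have the hypothetical keys 'direction',
    'protocol', 'dest_address' and 'dest_port'.
    """
    seen: Dict[Tuple[str, int], Set[str]] = defaultdict(set)
    for row in rows:
        if row["direction"].strip().lower() != "outbound":
            continue  # only outbound traffic matters for outbound rules
        port = int(row["dest_port"])
        if port in COMMON_PORTS:
            continue
        seen[(row["protocol"].strip().upper(), port)].add(row["dest_address"].strip())
    return dict(seen)


# Made-up sample standing in for exported audit events.
SAMPLE = """direction,protocol,dest_address,dest_port
Outbound,TCP,10.0.0.5,445
Outbound,TCP,10.0.0.6,445
Outbound,TCP,10.0.0.5,445
Outbound,TCP,10.0.2.9,3306
Outbound,TCP,203.0.113.10,443
Inbound,TCP,10.0.1.20,445
"""

if __name__ == "__main__":
    summary = destinations_by_port(csv.DictReader(io.StringIO(SAMPLE)))
    for (proto, port), dests in sorted(summary.items()):
        print(f"{proto}/{port}: {', '.join(sorted(dests, key=ipaddress.ip_address))}")
    # TCP/445: 10.0.0.5, 10.0.0.6
    # TCP/3306: 10.0.2.9
```

A port that shows up with only a handful of destinations (like 3306 here) is a candidate for an outbound block rule that goes around just those destinations.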

I hesitated to publish this because I still recommend patching as quickly as possible, but I hope this post shows creative ways that Windows Firewall with Advanced Security can be used to quickly block a specific risk without the risks of deploying a full workstation firewall allow list.

As hinted before, I have some interesting things in the works for AppLocker. The hard part is done and now I am polishing it up; I hope to have it published in my next post.

Until then, work hard and spend time with your family.

Branden

@limpidweb